
This blog post goes into more depth on how rate limits work across our services and the issues we faced when implementing a large-scale, clustered rate limiting system using Redis Cluster. We wanted to keep our options open for the future, so we also built an in-memory mock of Redis Cluster. This helped us test our approach, and it lets us choose, whenever we deploy a new service, between tightly coupled in-memory rate limits and rate limits handled by a Redis Cluster.

We recently released a commercial API which grants higher rate limits to different user levels. Initially, the concept seemed relatively straightforward: all you do is increment a few numbers, right?

As the project progressed, we realised that rate limiting at scale is not as easy as it seems.

Rate limiting is used extensively throughout our services to ensure that our infrastructure, particularly our streamers and APIs, isn’t abused. Our APIs limit how many calls each user or IP address can make over different time periods, in order to protect servers from being overloaded and to maintain a high-quality service for over one million unique daily clients.

Previously, our API servers sat behind a load balancer that used sticky IPs. Requests were rate limited per server, with the counters stored in the memory of each virtual machine. This was a very quick and easy way to rate limit malicious users of our API, but there were three major drawbacks with this approach:

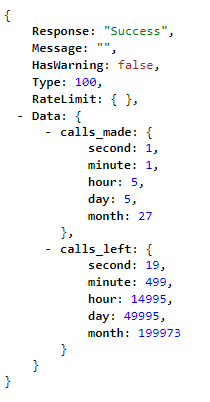

Because of these limitations, we decided to store the rate limits for all users in a Redis Cluster. This also gives us a better understanding of how users use our APIs and how frequently they call them. Rate limits are implemented for each second, minute, hour, day and month, and we also store each user’s historical hourly, daily and monthly calls and their overall number of calls.

Each request sent to our API is added to the rate limit data store. When a client requests data from our API servers, the server first checks whether an auth key or an API key has been sent with the request, either in a header or as a URL GET parameter.

If there is no valid API or auth key in the request, or the auth key passed has expired, we fall back to rate limiting against the IP. Rate limits against an IP are lower than rate limits for an account. In the best-case scenario, each IP-limited call executes at least 7 Redis commands: five increment the second, minute, hour, day and month timestamp keys by 1, one increments the overall call count and one updates the last-hit timestamp. In the worst-case scenario, at the end of each month, an additional 5 Redis commands are executed to clear the previous month’s API calls. For simplicity, all IP monthly rate limits begin at the start of the month (i.e. limits all start and end on the 1st of each month).

6 HINCRBY > increase the current second, current minute, current hour, current day, current month and overall calls

1 HSET > set the last time the user used the API

5 HDEL > delete the previous second, minute, hour, day and month

Redis commands executed for an API call based on IP
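The command sequence above can be sketched as follows. This is a minimal illustration, not our production code: `FakeRedisHashes` is a hypothetical in-memory stand-in for the three hash commands involved, the field names and the `_p` key suffix are illustrative, and passing the previous hit time in as `last_hit` is an assumption made to keep the example short.

```python
import time


class FakeRedisHashes:
    """Hypothetical in-memory stand-in for HINCRBY / HSET / HDEL."""

    def __init__(self):
        self.data = {}
        self.commands = 0  # how many commands have been issued

    def hincrby(self, key, field, amount=1):
        self.commands += 1
        fields = self.data.setdefault(key, {})
        fields[field] = fields.get(field, 0) + amount
        return fields[field]

    def hset(self, key, field, value):
        self.commands += 1
        self.data.setdefault(key, {})[field] = value

    def hdel(self, key, field):
        self.commands += 1
        return 1 if self.data.get(key, {}).pop(field, None) is not None else 0


def bucket_fields(ts):
    """Map a UNIX timestamp to one hash field per time bucket (names are illustrative)."""
    t = time.gmtime(ts)
    whole = int(ts)
    return {
        "second": f"s:{whole}",
        "minute": f"m:{whole // 60}",
        "hour": f"h:{whole // 3600}",
        "day": f"d:{whole // 86400}",
        "month": f"mo:{t.tm_year}-{t.tm_mon:02d}",
    }


def record_ip_call(store, ip, now, last_hit=None):
    """Record one call for an IP and return the current count per bucket."""
    key = f"{ip}_p"  # assumed key layout for the price API
    current = bucket_fields(now)
    counts = {name: store.hincrby(key, field) for name, field in current.items()}  # 5 HINCRBY
    counts["total"] = store.hincrby(key, "total")  # 6th HINCRBY
    store.hset(key, "last_hit", int(now))  # 1 HSET
    if last_hit is not None:
        previous = bucket_fields(last_hit)
        if previous["month"] != current["month"]:
            # Month rollover: clear the previous buckets, one HDEL each (5 HDEL).
            for field in previous.values():
                store.hdel(key, field)
    return counts
```

A normal call costs 7 commands in this sketch, and the first call of a new month costs 12. The caller would compare the returned counts against the limits for the IP and reject the request if any bucket is over.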

An example response from the rate limit endpoint

Things get a bit complex when an auth key is passed

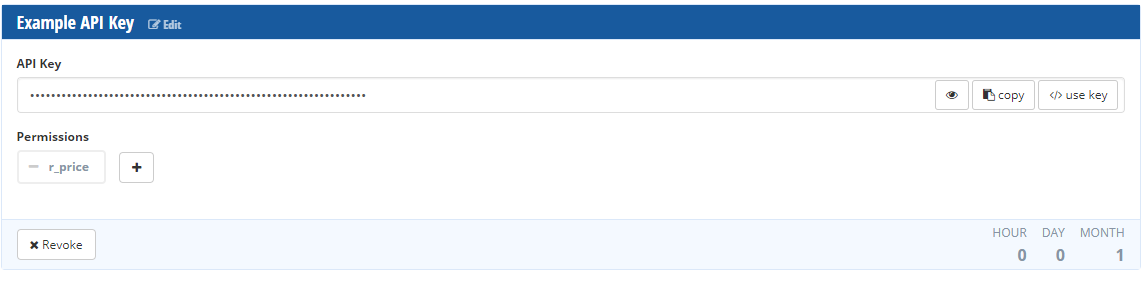

We wanted to give users the ability to have multiple API keys per account, each with different read and write permissions. Because of this, we decided to keep a record of both the user’s account rate limit and the rate limits for each of their auth keys.

If a valid API key is sent, the user data is fetched from Redis to retrieve the maximum rate limits for their subscription and the number of times they have called the API so far, and the request is added to the rate limit information for the user. As with the IP rate limits, we increment the second, minute, hour, day, month and all-time counters.

However, this is done for both the user’s account and the API key sent (the API key only tracks hour, day, month and all-time calls). On top of this, we wanted to store the user’s historical API calls, so at the end of each hour, day and month the user’s historical rate limits are also stored. In the worst-case scenario, 23 Redis commands are performed on the Redis Cluster.

Unlike IP rate limits, account rate limits are based on the day the user first subscribed and not on the 1st of the month. We had a long debate on whether to give subscribers month-to-month rate limits (and charge them pro rata for the first month) or give them a month from the day of subscription. We decided that subscription day to subscription day makes more sense. This complicated the code base a bit more, as we need to handle subscriptions made on the 29th to 31st and make sure they end on the correct day of the month. Somebody subscribing on the 30th of January will renew on the 28th of February (the 29th in a leap year), the 30th of March and so on.
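One way to get this behaviour is to always compute the renewal date from the original subscription date and clamp the day to the length of the target month. The sketch below is an illustration under that assumption; `renewal_date` is a hypothetical helper, not a function from our code base.

```python
import calendar
from datetime import date


def renewal_date(subscribed: date, periods: int) -> date:
    """Return the renewal date `periods` months after the subscription date.

    The day of the month is clamped to the last day of the target month,
    so a subscription made on the 30th or 31st still renews in short months.
    """
    month_index = subscribed.month - 1 + periods
    year = subscribed.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]  # number of days in that month
    return date(year, month, min(subscribed.day, last_day))


start = date(2023, 1, 30)
renewals = [renewal_date(start, n) for n in (1, 2, 3)]
```

Counting from the subscription date, rather than from the previous renewal, is what keeps the March renewal on the 30th instead of drifting to the 28th after February.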

6 HINCRBY > account second, account minute, account hour, account day, account month, account all time calls

4 HINCRBY > API key hour, day, month, all time calls

2 HSET > last account call time, last API key call time

5 HDEL > previous account second, previous account minute, previous account hour, previous account day, previous account month

3 HDEL > delete the hour, day, month keys for the API key passed

3 LPUSH > store historical API Calls

Redis commands executed for an API call with a valid auth key
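To make the tally explicit, the sketch below lists the commands for one authenticated call as plain tuples. Only the command counts come from the list above; the key names, field names and the single `period_rollover` flag (covering the case where hour, day and month all roll over at once) are assumptions for illustration.

```python
from collections import Counter


def plan_account_call(user_id, api_key, period_rollover=False):
    """List the commands for one call as (command, key, field_or_value) tuples."""
    account = f"account:{user_id}"        # hypothetical key name
    key = f"apikey:{user_id}:{api_key}"   # hypothetical key name
    account_periods = ["second", "minute", "hour", "day", "month"]
    key_periods = ["hour", "day", "month"]

    plan = [("HINCRBY", account, p) for p in account_periods + ["all_time"]]  # 6 HINCRBY
    plan += [("HINCRBY", key, p) for p in key_periods + ["all_time"]]         # 4 HINCRBY
    plan += [("HSET", account, "last_call"), ("HSET", key, "last_call")]      # 2 HSET

    if period_rollover:
        plan += [("HDEL", account, f"previous_{p}") for p in account_periods]  # 5 HDEL
        plan += [("HDEL", key, f"previous_{p}") for p in key_periods]          # 3 HDEL
        plan += [("LPUSH", f"history:{user_id}:{p}", "count") for p in key_periods]  # 3 LPUSH
    return plan


best_case = plan_account_call("user-1", "key-1")
worst_case = plan_account_call("user-1", "key-1", period_rollover=True)
totals = Counter(command for command, _, _ in worst_case)
```

In this sketch an ordinary call issues 12 commands and the worst case issues 23, matching the list above.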

Calculations and Data Storage Architecture

As mentioned previously, in the worst-case scenario 23 Redis commands are executed for each request to our API. With our infrastructure needing to handle 50,000 calls a second at peak, that is over one million Redis commands a second (50,000 × 23 = 1,150,000). Setting up the Redis Cluster was a lot more straightforward than expected; we mostly followed the Redis Cluster documentation.

Redis also comes with a useful benchmark tool (conveniently named redis-benchmark), which lets you determine how many operations a second a given Redis machine can perform.

redis-benchmark -n 10000 -t hset

Using this, we can approximate how many requests a second a machine in our cluster can handle.
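As a rough illustration of that approximation, the arithmetic can be written down as below. The peak call rate and the 23 commands per call come from this post; the benchmark figure passed in at the end is a made-up placeholder, not a measurement from our cluster. Since redis-benchmark exercises one command type at a time, the result is only a ballpark.

```python
PEAK_CALLS_PER_SECOND = 50_000  # peak API calls a second, from this post
WORST_CASE_COMMANDS = 23        # worst-case Redis commands per call, from this post


def peak_commands_per_second(calls=PEAK_CALLS_PER_SECOND, commands=WORST_CASE_COMMANDS):
    """Worst-case Redis commands a second across the whole cluster."""
    return calls * commands


def calls_supported(benchmark_ops_per_second, commands_per_call=WORST_CASE_COMMANDS):
    """Approximate API calls a second one machine can absorb in the worst case."""
    return benchmark_ops_per_second // commands_per_call


cluster_load = peak_commands_per_second()     # 50,000 * 23
per_machine = calls_supported(230_000)        # 230,000 ops/s is a placeholder value
```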

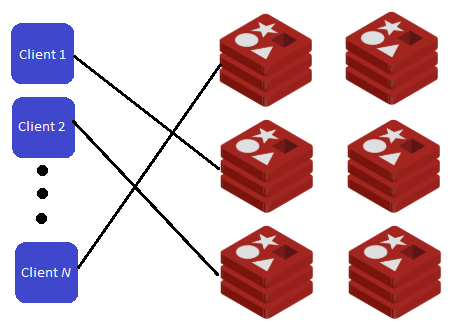

Due to the number of calls we receive, we decided to shard the data across a Redis Cluster consisting of six nodes in a master-slave configuration. A hash function is applied to the user ID and the API key to determine which node to send the request to (all handled by redis-clustr).
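For readers curious about what the client library does under the hood, the sketch below follows the key-to-slot mapping described in the Redis Cluster specification: the CRC16 (XMODEM variant) of the key, modulo 16384, with only the part inside the first non-empty `{...}` hash tag being hashed when one is present. It is an illustration only; in practice the client library does this for you, and the key names used here are hypothetical.

```python
def crc16_xmodem(data: bytes) -> int:
    """CRC16 with polynomial 0x1021 and initial value 0, as used by Redis Cluster."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def hash_slot(key: str) -> int:
    """Return the cluster hash slot (0..16383) for a key, honouring hash tags."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            raw = raw[start + 1:end]  # hash only the tag contents
    return crc16_xmodem(raw) % 16384


# Hypothetical key names: both share the tag "user-42", so they map to the same slot.
account_slot = hash_slot("{user-42}:account")
api_key_slot = hash_slot("{user-42}:apikey:abc")
```

Keys that share a hash tag always land on the same node, which matters if several keys for one user need to be touched together.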

Our general key structure is {IP}_service and {user_id}_api-key_service. Examples for the price API, with the codes _p, _p_api, _p_auth:

Each node in the cluster runs at around 50% capacity and we have the flexibility to scale up the machines or add more machines to the cluster when the capacity reaches 80%.

For monitoring, we use Grafana and Icinga.

We also keep track of the unique/total calls for each endpoint, the unique/total calls for each API instance and the unique/total calls across all servers.

Disclaimer: Please note that the content of this blog post was created prior to our company's rebranding from CryptoCompare to CCData.
